Assessment of the FY 2016 Survey of Nonprofit Research Activities to Determine Whether Data Meet Current Statistical Standards for Publication

Disclaimer

Working papers are intended to report exploratory results of research and analysis undertaken by the National Center for Science and Engineering Statistics (NCSES) within the National Science Foundation (NSF). Any opinions, findings, conclusions, or recommendations expressed in this working paper do not necessarily reflect the views of NSF. This working paper has been released to inform interested parties of ongoing research or activities and to encourage further discussion of the topic.

This working paper describes an assessment of the data in the FY 2016 Survey of Nonprofit Research Activities to identify estimates that would meet the NCSES quality criteria for official statistics. Please see the corresponding InfoBrief (https://ncses.nsf.gov/pubs/nsf22337/) and data tables (https://ncses.nsf.gov/pubs/nsf22338/) for the estimates that meet the criteria for NCSES official statistics.

Abstract

The Survey of Nonprofit Research Activities (NPRA Survey) collects information on activities related to research and development that are performed or funded by nonprofits in the United States. The NPRA Survey is part of the data collection portfolio directed by the National Center for Science and Engineering Statistics (NCSES) within the National Science Foundation (NSF). The FY 2016 NPRA Survey was conducted in 2018 with a sample of nonprofit organizations in the United States. The overall response rate was 48% unweighted and 61% weighted. Due to a low response rate, particularly for certain subgroups such as hospitals (35% unweighted and 45% weighted response rate), not all of the NPRA Survey data met NCSES’ criteria for official statistics. NCSES decided to undertake additional assessment in order to determine the subset of NPRA data that would meet the current NCSES statistical standards required for official release. This working paper identifies which data from the FY 2016 NPRA Survey met NCSES’ statistical standards. This document summarizes the steps taken to conduct this additional assessment, and it also includes detailed information on the data quality comparisons.

Introduction

The National Center for Science and Engineering Statistics (NCSES) conducted the Survey of Nonprofit Research Activities (NPRA Survey) to collect information on activities related to research and development that are performed or funded by nonprofits in the United States. A pilot survey was conducted from September 2016 through February 2017 that collected FY 2015 data, and a full implementation of the survey was conducted in 2018 that collected FY 2016 data.

The survey obtained a 48% unweighted response rate overall (61% weighted response rate). However, response rates varied across groups, with the lowest response rate from hospitals (35% unweighted and 45% weighted response rate). Due to the high nonresponse rate, not all of the NPRA Survey data meet NCSES’s criteria for official statistics as outlined in the NCSES statistical standards for information products (released in September 2020). At the conclusion of the FY 2016 survey, NCSES decided to discuss the results in a working paper, which cautioned that not all of the data met the criteria for official statistics. At the same time, NCSES decided to undertake additional assessment to determine the subset of NPRA Survey data that would meet the current NCSES statistical standards required for official release. This document reflects the results of that additional assessment.

The data quality of the NPRA Survey was assessed based on the Federal Committee on Statistical Methodology (FCSM) Framework for Data Quality. The framework states, “Data quality is the degree to which data capture the desired information using appropriate methodology in a manner that sustains public trust.” Therefore, NCSES’s assessment of the NPRA Survey data is guided by the three FCSM data quality dimensions—utility, objectivity, and integrity.

Utility refers to the extent to which information is well-targeted to identified and anticipated needs; it reflects the usefulness of the information to the intended users. Objectivity refers to whether information is accurate, reliable, and unbiased, and is presented in an accurate, clear and interpretable, and unbiased manner. Integrity refers to the maintenance of rigorous scientific standards and the protection of information from manipulation or influence as well as unauthorized access or revision. (p. 3)

Background

The nonprofit sector is one of four major sectors of the economy (i.e., business, government, higher education, and nonprofit organizations) that perform or fund R&D. Historically, NCSES has combined nonprofit sector data with data from the other three sectors to estimate total national R&D expenditures, which are presented in the annual report National Patterns of R&D Resources. The other three sectors are surveyed annually; however, prior to fielding the pilot NPRA Survey, NCSES had last collected R&D data from nonprofit organizations in 1997. That mail survey was based on a sample of 1,131 nonprofit organizations that were prescreened as performing or funding R&D worth at least $250,000 in 1996. Since the 1997 survey, the National Patterns of R&D Resources has relied on statistical modeling based on the results of the 1996–97 Survey of Research and Development Funding and Performance by Nonprofit Organizations, supplemented by information from the Survey of Federal Funds for Research and Development, to continue estimation of the nonprofit sector’s R&D expenditures.

The primary objective of the NPRA Survey is to fill in data gaps in the National Patterns of R&D Resources in such a way that the data are compatible with the data collected on other sectors of the U.S. economy and are appropriate for international comparisons. The results of the FY 2016 NPRA Survey provide the first estimates of nonprofit R&D activity in the United States since the late 1990s, as well as a better understanding of the scope and nature of R&D in the nonprofit sector.

From a Framework for Data Quality utility perspective, specifically the relevance and timeliness dimensions, the NPRA Survey will improve NCSES’s estimate of total R&D from the nonprofit sector for publication in the National Patterns of R&D Resources. Conducted in 2018, with a FY 2016 reference year, the NPRA Survey provides more current data than the existing source (1997 with annual adjustments). Moreover, the growth of the nonprofit sector highlights the relevance of these data for accurately measuring the share of R&D from the nonprofit sector.

Summary of NPRA Survey Methodology

A sample of nonprofit organizations was selected from the Internal Revenue Service (IRS) Exempt Organizations Business Master File Extract. Organizations filing Form 990 (public charities) and Form 990-PF (private foundations) were eligible. Organizations were stratified based on their estimated likelihood of performing or funding research. The stratification included a set of organizations “known” to perform or fund research since they were identified as performers or funders in auxiliary data sources, including past survey data from NCSES (2010–13 Survey of Federal Science and Engineering Support to Universities, Colleges, and Nonprofit Institutions, 2009 Survey of Science and Engineering Research Facilities, and 1996 and 1997 Survey of Research and Development Funding and Performance by Nonprofit Organizations), association and society memberships (Association of Independent Research Institutes, Consortium of Social Science Associations, Science Philanthropy Alliance, Health Research Alliance), and other sources (affiliates of the Higher Education Research and Development Survey, Grant Station Funder Database, and sources discovered through cognitive interviews). These organizations were selected with certainty.

The NPRA Survey staff attempted to contact U.S.-based nonprofit organizations using a two-phase approach to obtain information about whether the organization performed or funded research. For some organizations, performer and funder status was known based on auxiliary data sources (see previous paragraph). Organizations in the sample with an unknown performer or funder status were sent a screener card in phase 1, which began in February 2018.

Phase 2, which began in April 2018, included the phase 1 organizations that reported either performing or funding R&D during phase 1 (including those that did not respond) and organizations with a known performer or funder status through sources external to the survey. Performer and funder status (i.e., whether an organization performed research, funded research, or both in FY 2016) was then confirmed in phase 2, and organizations that either performed or funded research were asked to complete additional questions about their research activities. Performer or funder status could have been obtained in either data collection phase 1 or phase 2, but research activity questionnaires were only completed in phase 2.

Overall response rates were calculated by using best practices established by the American Association for Public Opinion Research (https://www.aapor.org/Standards-Ethics/Standard-Definitions-(1).aspx). Organizations that reported no R&D activity in phase 1 or phase 2 were considered complete surveys and were included in the numerator of response rate calculations. Organizations that reported performing or funding R&D in phase 1 or phase 2 and completed some or all of the full questionnaire, with a minimum of the total amounts answered (Q9 and Q16), were considered complete or partial surveys and were included in the numerator of response rate calculations. Organizations that reported performing or funding in phase 1 but did not complete the phase 2 questionnaire were not included in the numerator of response rate calculations.

Imputation was conducted for organizations that reported that they performed or funded research (in phase 1 or phase 2) but did not provide information on the amounts spent in the full questionnaire. These organizations were considered nonrespondents. The imputation included substituted values based on auxiliary data about the amounts spent performing or funding research (information reported in annual reports and IRS filings, as well as information from the pilot survey) and model-based imputations. The imputed amount represented about 30% of the overall total amount of R&D performance, with 20% from auxiliary data and the pilot survey and 10% from the imputation model. Nonresponse weights were used to account for organizations that did not respond about their performing or funding status in either phase 1 or phase 2. These organizations were also considered nonrespondents. After a review of nonresponse adjustment alternatives using total expenses and total organizations, the nonresponse adjustment was ultimately based on a ratio estimator using total expenses, given its correlation with the survey outcomes of total R&D performance (0.49) and funding (0.27).

NPRA Survey Adherence to NCSES Statistical Standards

NCSES has a set of statistical standards for the release of “official statistics,” specifically:

Standard 9.2: The statistical quality of official statistics must undergo rigorous program review and statistical review and approved by the chief statistician for releasing.

Guideline 9.2a: The reliability of official statistics must meet the following quality criteria:

- Top line estimates have a coefficient of variation (CV) < 5%.

- The estimated CV for the majority of the key estimates is ≤ 30%.

Guideline 9.2b: The indicators of accuracy of official statistics must meet the following quality criteria:

- Unit response rates >60%.

- Item response rates >70%.

- Coverage ratios for population groups associated with key estimates are >70%.

- Above thresholds may not apply if nonresponse bias analyses are at an acceptable level.
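The criteria in Guidelines 9.2a and 9.2b can be read as a simple checklist. The following is a minimal sketch, not NCSES code: the function name `check_official_statistics` and its argument names are hypothetical, "majority" is interpreted here as more than half of the key estimates, and the example inputs are figures reported later in this paper. The sketch does not encode the final bullet, under which the thresholds may not apply if nonresponse bias analyses are at an acceptable level.

```python
def check_official_statistics(top_line_cv, key_cvs, unit_rr, item_rr, coverage):
    """Compare quality metrics (as proportions) with the Guideline 9.2a/9.2b thresholds.

    All names are hypothetical; the thresholds are the ones quoted in the guidelines.
    """
    key_ok = sum(1 for cv in key_cvs if cv <= 0.30)
    return {
        "top_line_cv": top_line_cv < 0.05,        # top line CV < 5%
        "key_cvs": key_ok > len(key_cvs) / 2,     # majority of key CVs <= 30% (assumed: more than half)
        "unit_response": unit_rr > 0.60,          # unit response rate > 60%
        "item_response": item_rr > 0.70,          # item response rate > 70%
        "coverage": coverage > 0.70,              # coverage ratio > 70%
    }


# Example using figures reported in this paper: CV of total expenses (0.5%),
# CVs of the proportions and means (12%, 12%, 8%, 8%), the unweighted unit
# response rate (48%), the item response rate for performing amount
# (289 of 912 imputed), and the estimated frame coverage of performers (85%).
result = check_official_statistics(
    top_line_cv=0.005,
    key_cvs=[0.12, 0.12, 0.08, 0.08],
    unit_rr=0.48,
    item_rr=1 - 289 / 912,
    coverage=0.85,
)
```

In this illustration, the unit response and item response checks fail while the others pass, which mirrors why the paper turns to a nonresponse bias analysis.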

The NCSES standards focus on the aspects of accuracy, including response rates, data missingness, and frame coverage as well as precision. These elements align with the accuracy and reliability dimension of the objectivity domain. Demonstrating that the NPRA Survey produces accurate and reliable estimates of total R&D performing and funding is the primary focus of this assessment. Therefore, we summarize the metrics as compared to the standard in table 1 and follow with a more detailed description for each standard.

Table 1. FY 2016 NPRA Survey adherence to standards

CV = coefficient of variation; NPRA Survey = Survey of Nonprofit Research Activities.

a Does not include imputation variance. Amount of performance and funding was imputed for organizations that confirmed that they fund or perform research but did not provide the amount. No imputation was conducted for total expenses, performance status, or funding status.

b Includes imputations using substitutions based on auxiliary data.

Source(s):

National Center for Science and Engineering Statistics, Survey of Nonprofit Research Activities, FY 2016.

CV. The total number of responding organizations is 3,254. The CV for total expenses is 0.5%, well below the 5% CV standard. However, the sample was designed to optimize estimates of total expenses, so other estimates are expected to have higher variability. The CVs for the proportion of performing organizations and the proportion of funding organizations are both 12%. These relatively high CVs are largely due to the low proportions of nonprofits that reported performing and funding R&D, 6% (+/−1.4%) and 4% (+/−1.0%), respectively; in absolute terms, the confidence intervals for both estimates are narrow. The CVs for mean performance and mean funding are both 8%. These CVs, based on the subset of organizations that indicated they perform or fund research, meet the 30% CV standard for the majority of key estimates but not the 5% CV standard for top line estimates. Since imputation is a separate criterion, these CV estimates are based only on sampling variance. Table 2 includes additional CVs for total performance and funding amounts by source, R&D type, and field as well as the mean number of full-time equivalents. All but nine of the estimates meet the standard of a 30% CV for key estimates.
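The link between a small proportion, its margin of error, and its CV can be illustrated with a short calculation. This is a sketch under an assumption the paper does not state: that the +/− values are 95% confidence interval half-widths based on a normal multiplier of 1.96. The function name `cv_from_interval` is hypothetical.

```python
def cv_from_interval(estimate, half_width, z=1.96):
    """Approximate CV = standard error / estimate.

    Assumes half_width is a 95% confidence interval half-width (z = 1.96),
    which is an assumption made for this illustration only.
    """
    standard_error = half_width / z
    return standard_error / estimate


# Proportions and margins of error reported in the text, in percentage points.
cv_performing = cv_from_interval(6.0, 1.4)
cv_funding = cv_from_interval(4.0, 1.0)
```

Under that assumption, both results land at roughly 12% to 13%, in line with the CVs reported in the text given that the published inputs are rounded. The calculation also shows why a narrow interval in absolute terms can still produce a double-digit CV when the estimate itself is small.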

Table 2. Total amount spent on R&D by nonprofits that performed or funded R&D and coefficient of variation of FY 2016 NPRA Survey, by source, R&D type, and field

NPRA Survey = Survey of Nonprofit Research Activities.

a Does not include imputation variance.

Note(s):

Details for full-time equivalent do not add to total because of missing data.

Source(s):

National Center for Science and Engineering Statistics, Survey of Nonprofit Research Activities, FY 2016.

Coverage ratio. Based on analysis conducted during the sampling, 85% of performers and 88% of funders are estimated to be covered in the frame, which is well above the NCSES threshold of 70% coverage. The frame coverage estimates are based on an analysis of the percentage of “likely” performers and “likely” funders on the frame after exclusions based on type of organization (e.g., educational institutions or churches) and the size truncation based on total expenses for public charities or total assets for private foundations. The exclusions and truncation were conducted to increase the efficiency of finding R&D performers and funders. Since the exclusions focused on organization types that are not likely to perform or fund or are small in terms of overall expenses or assets, the undercoverage most likely has little impact on the estimated total R&D performance and funding.

Unit response rate. The unweighted response rate (48%) falls short of the 60% standard. The weighted response rate (61%), which uses the sampling weights for all 6,373 sample organizations (6,071 identified as eligible), does meet the standard. The difference in the unweighted and weighted response rates means that the average weight for responders (mean weight = 23.6) is higher than that for nonresponders (14.2). Unweighted and weighted response rates provide distinct measures of survey quality. Unweighted response rates are an indicator of success with data collection operations. Weighted response rates are most useful when the sampling fractions vary across strata in a sample design and when the interest is population-level inference about the amount of information captured from the population sampled.

Both unweighted and weighted response rates are important metrics for evaluating the quality of the data. Although the weighted response rate meets the standard, the unweighted response rate does not. Therefore, additional analysis measuring the impact of nonresponse is required.
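The distinction between the two response rates can be sketched as follows. This is an illustrative sketch with toy data, not the survey's production code: the function name `response_rates` and the dictionary keys are hypothetical, and the calculation is simplified relative to the full AAPOR definitions (for example, it ignores cases of unknown eligibility).

```python
def response_rates(orgs):
    """Return (unweighted, weighted) response rates among eligible organizations.

    Each organization is a dict with hypothetical keys:
      'weight'    - sampling weight
      'eligible'  - True if the organization is in scope
      'responded' - True if it counts as a complete or partial survey
    """
    eligible = [o for o in orgs if o["eligible"]]
    n_resp = sum(1 for o in eligible if o["responded"])
    w_resp = sum(o["weight"] for o in eligible if o["responded"])
    w_all = sum(o["weight"] for o in eligible)
    return n_resp / len(eligible), w_resp / w_all


# Toy data (not survey data): respondents carry larger weights than nonrespondents.
toy = [
    {"weight": 30.0, "eligible": True, "responded": True},
    {"weight": 20.0, "eligible": True, "responded": True},
    {"weight": 10.0, "eligible": True, "responded": False},
    {"weight": 10.0, "eligible": True, "responded": False},
    {"weight": 5.0, "eligible": False, "responded": False},
]
unweighted, weighted = response_rates(toy)
```

As in the survey, the weighted rate in this toy example exceeds the unweighted rate because the responding organizations have larger average weights than the nonresponding ones.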

Item response rate. The amounts of imputation for performing status (28%) and funding status (17%) are within the limit implied by the 70% item response threshold (no more than 30% imputed), as is the imputation for funding amount (26%). However, the performing amount falls just short of the standard, with 32% of the responses imputed (289 of 912).

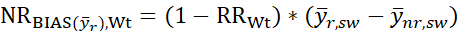

Nonresponse bias. The response rate and the item response rate evaluations both include metrics that do not meet the NCSES quality standards. The standards state that the nonresponse standard threshold may be exceeded if the estimate for nonresponse bias is acceptable. The nonresponse bias is a function of the weighted response rate and the weighted mean difference between the respondents and nonrespondents is as follows:

where

is the weighted response rate,

is the weighted respondent mean, and

is the weighted nonrespondent mean.

The estimated nonresponse bias was a mean bias of −4 million for total expenses (overall mean = 13 million, respondent mean = 9 million). This corresponds to a relative bias of −0.451 (table 3). This result suggests that organizations with higher expenses were less likely to respond than those with lower total expenses. This is confirmed by the response rates for deciles based on total expenses presented in table 4. The lowest response rates occur in deciles 9 and 10, the organizations with the highest expenses. Over 50% of the organizations in these deciles are hospitals, the organization type with the lowest response rate. When organizations in deciles 9 and 10 are removed, the relative bias decreases to −0.112 (data not shown), demonstrating that the largest organizations contribute the majority of the bias in the total.
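The mean bias can be illustrated with the rounded figures in the text. This is a sketch under stated assumptions: it uses the standard deterministic expression, bias = (1 − weighted response rate) × (respondent mean − nonrespondent mean), and it plugs in the overall weighted response rate of 61%, which may differ from the rate underlying table 3. The function names are hypothetical, and because the inputs are rounded the sketch is not expected to reproduce the published relative bias.

```python
def implied_nonrespondent_mean(rr_w, mean_resp, mean_all):
    """Solve mean_all = rr_w * mean_resp + (1 - rr_w) * mean_nonresp for mean_nonresp."""
    return (mean_all - rr_w * mean_resp) / (1.0 - rr_w)


def nonresponse_bias(rr_w, mean_resp, mean_nonresp):
    """Bias of the respondent mean relative to the full-sample mean."""
    return (1.0 - rr_w) * (mean_resp - mean_nonresp)


# Rounded values from the text, in millions; rr_w = 0.61 is an assumption.
rr_w = 0.61
mean_resp = 9.0
mean_all = 13.0

mean_nonresp = implied_nonrespondent_mean(rr_w, mean_resp, mean_all)
bias = nonresponse_bias(rr_w, mean_resp, mean_nonresp)
```

With these inputs the bias equals the respondent mean minus the overall mean, −4 million, and the implied nonrespondent mean is roughly twice the respondent mean, consistent with larger organizations being less likely to respond.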

Table 3. Estimated bias of expenses, assets, and revenues in the FY 2016 NPRA Survey

NPRA Survey = Survey of Nonprofit Research Activities.

a The sample analyzed in this table excludes 302 cases identified as being out of sample. In addition, 31 organizations had missing expenses and revenues; 32 had missing assets.

Source(s):

National Center for Science and Engineering Statistics, Survey of Nonprofit Research Activities, FY 2016.

The imputation and nonresponse adjustments were informed by the nonresponse bias analysis. The imputations were prioritized based on previous data from the pilot survey as well as administrative data for the largest organizations. These substitutions reduced the bias of total expenses to −0.336. Further, the imputation model predicting the amount of performance and funding based on total expenses reduces the nonresponse bias to −0.169 for total expenses.

To address higher nonresponse for hospitals and larger organizations, the nonresponse adjustment cells included hospitals and a flag identifying the largest 500 organizations in terms of expenses. The nonresponse adjustment was based on a ratio adjustment using total expenses. Therefore, by construction, the weighted nonresponse bias in total expenses is 0. Further, if successful, the nonresponse adjustment based on expenses will reduce the nonresponse bias for correlated measures such as assets and revenues. The relative biases in assets (−0.403) and revenues (−0.414) are similarly high based on the full and partially completed surveys. After weighting, the nonresponse bias is reduced to +0.046 for total assets and +0.014 for total revenues.
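A ratio adjustment of this kind can be sketched as follows. This is a minimal illustration with toy data, not the survey's weighting code: the function name `ratio_adjust`, the dictionary keys, and the cell labels are hypothetical. Within each adjustment cell, respondent weights are multiplied by the ratio of the weighted total expenses of all sampled organizations to the weighted total expenses of respondents.

```python
from collections import defaultdict


def ratio_adjust(orgs):
    """Return respondents with nonresponse-adjusted weights ('adj_weight').

    Each organization is a dict with hypothetical keys 'cell', 'weight',
    'expenses', and 'responded'.
    """
    full_total = defaultdict(float)   # weighted expenses, all sampled orgs, by cell
    resp_total = defaultdict(float)   # weighted expenses, respondents only, by cell
    for o in orgs:
        wx = o["weight"] * o["expenses"]
        full_total[o["cell"]] += wx
        if o["responded"]:
            resp_total[o["cell"]] += wx

    adjusted = []
    for o in orgs:
        if o["responded"]:
            factor = full_total[o["cell"]] / resp_total[o["cell"]]
            adjusted.append({**o, "adj_weight": o["weight"] * factor})
    return adjusted


# Toy data (not survey data): one hospital cell and one non-hospital cell.
toy = [
    {"cell": "hospital", "weight": 10.0, "expenses": 100.0, "responded": True},
    {"cell": "hospital", "weight": 10.0, "expenses": 300.0, "responded": False},
    {"cell": "other", "weight": 20.0, "expenses": 5.0, "responded": True},
    {"cell": "other", "weight": 20.0, "expenses": 5.0, "responded": False},
    {"cell": "other", "weight": 20.0, "expenses": 10.0, "responded": True},
]
adjusted = ratio_adjust(toy)
total_full = sum(o["weight"] * o["expenses"] for o in toy)
total_adjusted = sum(o["adj_weight"] * o["expenses"] for o in adjusted)
```

After the adjustment, the respondents' weighted total expenses match the full-sample total in every cell, which is why the bias in total expenses is 0 by construction. Any bias reduction for other variables depends on how strongly they are correlated with expenses.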

Considering that hospitals and health organizations tended to have lower response rates, an indicator for hospitals and non-hospitals was included when defining the nonresponse adjustment cells. The result was low relative bias for health and medical organizations when measuring total expenses (−0.04), total assets (−0.03), and total revenues (−0.04) (data not shown).

In summary, although the nonresponse bias is high, the information available on the nonrespondents allows for imputation and nonresponse adjustments to address these observed biases. Assuming R&D performance and funding expenditures are correlated with the size of the organization based on expenses, the risk of nonresponse bias is reduced. This is the case when measuring assets and revenues, which were not used in the weighting but are both correlated with expenses. We cannot directly measure the reduction in the bias for total performing and total funding since we do not have data for nonrespondents. However, we can evaluate the correlation of expenses and R&D performance and funding using the respondents to the survey. Table 4 provides the mean R&D performance and R&D funding for each expense decile. Both increase as the deciles of total expenses increase. The log-log imputation models used for the NPRA Survey provide further evidence of a relationship between expenses and R&D performance and funding: total expenses was a significant predictor in both models, with estimates of 0.54 (standard error = 0.08) for total performing and 0.47 (standard error = 0.09) for total funding.
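A log-log model of the general kind mentioned above can be sketched with a single predictor. This is an illustration only: the paper does not give the full model specification, so the single-predictor form, the function names `loglog_fit` and `predict`, and the toy data are all assumptions. The simple back-transformation shown here also ignores retransformation bias.

```python
import math


def loglog_fit(expenses, rd_amounts):
    """Ordinary least squares fit of log(R&D amount) on log(total expenses).

    Returns (intercept, slope); in a log-log model the slope can be read as an elasticity.
    """
    xs = [math.log(x) for x in expenses]
    ys = [math.log(y) for y in rd_amounts]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def predict(intercept, slope, expenses):
    """Predicted R&D amount on the original scale (naive back-transformation)."""
    return math.exp(intercept + slope * math.log(expenses))


# Toy data (not survey data) constructed so that rd = 2 * expenses ** 0.5 exactly.
toy_expenses = [1.0e6, 4.0e6, 9.0e6, 1.6e7]
toy_rd = [2000.0, 4000.0, 6000.0, 8000.0]
intercept, slope = loglog_fit(toy_expenses, toy_rd)
imputed = predict(intercept, slope, 2.5e7)
```

A slope below 1, as in this toy example, would mean that the R&D amount grows less than proportionally with total expenses.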

Table 4. Response rate of nonprofits to the FY 2016 NPRA Survey, by expense decile, and percentage of hospitals in each expense decile

NPRA Survey = Survey of Nonprofit Research Activities.

Note(s):

Decile 1 contains the organizations with the lowest total expenses, and decile 10 contains those with the highest.

Source(s):

National Center for Science and Engineering Statistics, Survey of Nonprofit Research Activities, FY 2016.

Recommendations for Data Tables to Publish as Official Statistics

The final domain of the Framework for Data Quality is the integrity domain, which focuses on the data producer and the unbiased development of data that instills confidence in its accuracy. This assessment and the rigorous review of these data before publication speak directly to the scientific integrity and credibility dimensions:

Scientific integrity refers to an environment that ensures adherence to scientific standards, use of established scientific methods to produce and disseminate objective data products, and protection of these products from inappropriate political influence.

Credibility characterizes the confidence that users place in data products based simply on the qualifications and past performance of the data producer.

The NPRA Survey was designed to provide timely and relevant data to meet a long-standing need for information on R&D expenditures within the nonprofit sector. As noted in this report, low response rates called into question the quality of the data. The quality assessment included a thorough review of the error sources, including sampling error, response rates, frame coverage error, item nonresponse, and nonresponse bias. Further, the assessment reviewed the post-survey adjustments (e.g., weighting and imputation) designed to mitigate the risk of bias. This process of additional assessment identified which data from the NPRA Survey meet the NCSES statistical standards and illuminated the aspects of the data that should not be published.

Based on this additional assessment, we recommend publishing a set of high-level summary data tables, shown in appendix A, as NCSES official statistics. The tables cover all categories in table 2 except field, due to the high CVs for several fields. Variance estimates are based on successive difference replication using 80 replicates. The CVs in the appendix tables include both the sampling variance and imputation variance. All estimates are under the CV standard of 30%.
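The replicate-based CV calculation can be sketched as follows. This is an illustration under an assumption: it uses the commonly cited successive difference replication variance formula, in which the sum of squared deviations of the replicate estimates from the full-sample estimate is multiplied by 4/R, where R is the number of replicates. The paper does not state the formula it used, the function name `sdr_cv` is hypothetical, and the sketch covers replicate variance only, not the imputation variance included in the appendix tables.

```python
import math


def sdr_cv(full_estimate, replicate_estimates):
    """CV from successive difference replication (assumed 4/R variance factor)."""
    r = len(replicate_estimates)
    variance = (4.0 / r) * sum(
        (rep - full_estimate) ** 2 for rep in replicate_estimates
    )
    return math.sqrt(variance) / full_estimate


# Toy data (not survey data): 80 replicate estimates alternating around 100.
toy_replicates = [95.0 if i % 2 == 0 else 105.0 for i in range(80)]
toy_cv = sdr_cv(100.0, toy_replicates)
```

Because the variance is itself estimated from a finite set of replicates, the resulting CV can shift when the number of replicates changes, particularly for estimates that sit close to the 30% threshold.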

As part of the post-survey evaluation report, we evaluated the stability of the variance estimates by comparing variance estimates based on 80 replicates with those based on 160 replicates. The CV for the total R&D performance was 13.2% for 80 replicates and 13.6% for 160 replicates. The CV for the total R&D funding was 18.0% for 80 replicates and 16.1% for 160 replicates. For the estimates in appendix A, three estimates exceeded the 30% CV standard when using 160 replicates instead of 80 replicates. These included the following:

Appendix table 1-A. Total R&D expenditures sourced from nonprofits (CV = 31%)

Appendix table 7-A. Total funds for R&D from internal funds (CV = 31%)

Appendix table 9-A. Nonfederal funds for R&D for other nonprofit organizations (CV = 34%)

All other estimates have a CV under 30%.

Specifically, this subset of FY 2016 NPRA data will be published via a Data Release InfoBrief and a set of data tables (https://ncses.nsf.gov/pubs/nsf22337/ and https://ncses.nsf.gov/pubs/nsf22338/). Technical notes published with the data tables will also be provided to summarize the survey methodology and the data limitations. The technical notes will reference this working paper where additional survey information is provided, including details about the initial assessment of the NPRA survey estimates.

Notes

1. An organization is considered a nonprofit if it is categorized by the Internal Revenue Service as a 501(c)(3) public charity, a 501(c)(3) private foundation, or another exempt organization (e.g., a 501(c)(4), 501(c)(5), or 501(c)(6)).

2. The pilot survey data were provided by the respondents for testing purposes only and were not published.

3. Britt R, Jankowski J; National Center for Science and Engineering Statistics (NCSES). 2021. FY 2016 Nonprofit Research Activities Survey: Summary of Methodology, Assessment of Quality, and Synopsis of Results. Working Paper NCSES 21-202. Alexandria, VA: National Science Foundation, National Center for Science and Engineering Statistics. Available at https://www.nsf.gov/statistics/2021/ncses21202/.

4. Federal Committee on Statistical Methodology. 2020. A Framework for Data Quality. FCSM 20-04. Available at https://nces.ed.gov/fcsm/pdf/FCSM.20.04_A_Framework_for_Data_Quality.pdf.

5. The nonprofit sector includes nonprofit organizations other than government or academia. R&D performed by nonprofits that receive federal funds is reported on in the Survey of Federal Funds for Research and Development. R&D performed by higher education nonprofits is reported on in the Higher Education Research and Development Survey.

6. Throughout this document, the term “research” is synonymous with “research and development” or “R&D.”

7. For more information on lessons learned regarding data collection operations, see the Working Paper, FY 2016 Nonprofit Research Activities Survey: Summary of Methodology, Assessment of Quality, and Synopsis of Results at https://www.nsf.gov/statistics/2021/ncses21202/#chp5.

Appendix A. Data Tables with Relative Standard Errors

Suggested Citation

Britt R, Mamon S, ZuWallack R; National Center for Science and Engineering Statistics (NCSES). 2022. Assessment of the FY 2016 Survey of Nonprofit Research Activities to Determine Whether Data Meet Current Statistical Standards for Publication. Working Paper NCSES 22-212. Alexandria, VA: National Science Foundation. Available at https://ncses.nsf.gov/pubs/ncses22212/.

Contact Us

NCSES

National Center for Science and Engineering Statistics

Directorate for Social, Behavioral and Economic Sciences

National Science Foundation

2415 Eisenhower Avenue, Suite W14200

Alexandria, VA 22314

Tel: (703) 292-8780

FIRS: (800) 877-8339

TDD: (800) 281-8749

E-mail: ncsesweb@nsf.gov
